Istio 1.3.0 installation and testing

1. Introduction

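At the time of writing, the latest Istio release is 1.3.0. It can be installed with Helm or Ansible. Helm is the recommended method because it supports a large number of fine-grained configuration options.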

This article walks through installing Helm (both Helm 3 and Helm 2.14.3) and Istio 1.3.

2. Download Helm

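Helm 3 (still a beta, v3.0.0-beta.2, at the time) differs considerably from Helm 2.14.3 and still appears to have compatibility problems with Istio. Helm 2.14.3 is therefore recommended, and all of the experiments below use 2.14.3.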
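One concrete incompatibility is that the Istio commands in this article use Helm 2 syntax such as `--name`. Helm 3 removed that flag, and the release name becomes the first positional argument instead. The minimal sketch below only prints the equivalent install command for each major version, so nothing is executed. `istio_install_cmd` is a hypothetical helper and is not part of Helm or Istio.

```bash
#!/usr/bin/env bash
# Print the command that installs the istio.io/istio chart as release "istio"
# into istio-system, for Helm major version 2 or 3.
# istio_install_cmd is a hypothetical helper used only for illustration.
istio_install_cmd() {
  local major="$1" helm_bin="${2:-helm}"
  case "$major" in
    2)
      # Helm 2: the release name is passed with --name; Tiller performs the install.
      echo "$helm_bin install istio.io/istio --name istio --namespace istio-system"
      ;;
    3)
      # Helm 3: --name is gone; the release name is the first positional argument.
      echo "$helm_bin install istio istio.io/istio --namespace istio-system"
      ;;
    *)
      echo "unsupported Helm major version: $major" >&2
      return 1
      ;;
  esac
}
```

For example, `istio_install_cmd 3 helm3` prints the Helm 3 form using the `helm3` binary installed below. Helm 3 also has no Tiller and, unlike Helm 2, does not create the target namespace for you.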

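(1) Install Helm 3

Helm 3 needs no installer and no server-side component (Tiller). Download and extract the archive, and the helm binary runs directly.

mkdir -p ~/helm3
cd ~/helm3
wget https://get.helm.sh/helm-v3.0.0-beta.2-linux-amd64.tar.gz
tar -zxvf helm-v3.0.0-beta.2-linux-amd64.tar.gz
cp linux-amd64/helm /usr/local/bin/helm3    # the archive extracts into linux-amd64/; the name helm3 keeps it apart from Helm 2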

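(2) Install Helm 2.14.3

Helm 2 also needs Tiller running in the cluster. The command `helm init --service-account tiller` expects the tiller service account to exist already, so create it first and bind it to cluster-admin. This is the simple setup described in the Helm 2 RBAC documentation.

mkdir -p ~/helm
cd ~/helm
wget https://get.helm.sh/helm-v2.14.3-linux-amd64.tar.gz
tar -zxvf helm-v2.14.3-linux-amd64.tar.gz
cd linux-amd64
cp helm /usr/local/bin
kubectl -n kube-system create serviceaccount tiller
kubectl create clusterrolebinding tiller --clusterrole=cluster-admin --serviceaccount=kube-system:tiller
helm init --service-account tiller --skip-refresh --tiller-image junolu/tiller:v2.14.3    # --skip-refresh skips downloading the stable repo index; junolu/tiller replaces the default gcr.io Tiller image
helm version    # verify the installation: both Client and Server should report v2.14.3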
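Two problems can show up in this output. `helm version` can print the Client line before Tiller is ready. A Tiller image whose version differs from the client can also cause "incompatible versions" errors. The minimal sketch below checks both conditions, assuming the `Client: vX.Y.Z+g<commit>` / `Server: ...` lines that Helm 2 prints with `--short`. `helm_versions_match` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Read `helm version --short` output (Helm 2) on stdin and succeed only if
# both the client and the Tiller server report the same SemVer.
# helm_versions_match is a hypothetical helper name.
helm_versions_match() {
  local client="" server="" key value
  while read -r key value; do
    value="${value%%+*}"          # drop the "+g<commit>" build suffix
    case "$key" in
      Client:) client="$value" ;;
      Server:) server="$value" ;;
    esac
  done
  if [ -z "$server" ]; then
    echo "no Server line: Tiller is not reachable yet" >&2
    return 1
  fi
  if [ "$client" != "$server" ]; then
    echo "version mismatch: client=$client server=$server" >&2
    return 1
  fi
  echo "$client"
}
```

Usage: `helm version --short | helm_versions_match` prints the shared version on success.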

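3. Configure a faster Helm chart mirror

Add Microsoft's (Azure China) chart mirror, which stays closely in sync with the official charts repository.

helm repo add azure http://mirror.azure.cn/kubernetes/charts/
helm repo update
helm search mysql    # check that the chart repository is reachable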

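4. Download Istio

Download Istio 1.3.0 from the official GitHub releases.

mkdir -p /root/istio
cd /root/istio
wget https://github.com/istio/istio/releases/download/1.3.0/istio-1.3.0-linux.tar.gz
tar -xvf istio-1.3.0-linux.tar.gz
cd istio-1.3.0    # the install/ and samples/ paths used below are relative to this directory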

5. Add the Istio Helm repository

helm repo add istio.io https://storage.googleapis.com/istio-release/releases/1.3.0/charts/

6. Install Istio

Install Istio with a ready-made configuration, without custom tuning.

(1) Create a namespace to hold all Istio components

kubectl create namespace istio-system

(2) Create the Istio CRDs (custom resource definitions)

helm template install/kubernetes/helm/istio-init --name istio-init --namespace istio-system | kubectl apply -f -

Or, from the Istio Helm repository added in step 5:

helm install istio.io/istio-init --name istio-init --namespace=istio-system

(3) Verify that the CRDs were created. There should be 23 in total.

kubectl get crds | grep "istio.io" | wc -l
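Right after installing istio-init, the count can still be below 23, because the chart creates the CRDs through Kubernetes Jobs. The small sketch below applies the same filter and compares the result against the expected count, so it can drive a wait loop. `check_istio_crds` is a hypothetical name. It escapes the dot, which the plain `grep "istio.io"` treats as "any character".

```bash
#!/usr/bin/env bash
# Count Istio CRDs in `kubectl get crds` output read from stdin and compare
# the result with an expected number (default 23, the figure given above).
# check_istio_crds is a hypothetical helper name.
check_istio_crds() {
  local expected="${1:-23}" count
  # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps the 0.
  count=$(grep -c 'istio\.io' || true)
  if [ "$count" -ne "$expected" ]; then
    echo "found $count Istio CRDs, expected $expected" >&2
    return 1
  fi
  echo "$count"
}
```

For example, `until kubectl get crds | check_istio_crds 23 >/dev/null 2>&1; do sleep 5; done` blocks until all CRDs are present.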

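(4) Install the Istio components

To keep the installation simple, use the values-istio-demo-auth.yaml values file. It enables the commonly used Istio components out of the box, and it is the demo profile with mutual TLS enabled.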

helm install install/kubernetes/helm/istio --name istio --namespace istio-system --values install/kubernetes/helm/istio/values-istio-demo-auth.yaml

Or install from the Istio Helm repository, setting the gateway type and add-ons directly:

helm install istio.io/istio --name istio --namespace=istio-system --set gateways.istio-ingressgateway.type=NodePort --set grafana.enabled=true --set kiali.enabled=true --set tracing.enabled=true
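The `--set` flags in this second command map one-to-one onto a nested values file, where each dot in a key is one level of YAML nesting. The sketch below writes the equivalent file. The helper name `write_istio_values` and the default file name are illustrative assumptions.

```bash
#!/usr/bin/env bash
# Write a values file equivalent to:
#   --set gateways.istio-ingressgateway.type=NodePort --set grafana.enabled=true
#   --set kiali.enabled=true --set tracing.enabled=true
# write_istio_values and the default file name are hypothetical.
write_istio_values() {
  local out="${1:-istio-nodeport-values.yaml}"
  cat > "$out" <<'EOF'
gateways:
  istio-ingressgateway:
    type: NodePort
grafana:
  enabled: true
kiali:
  enabled: true
tracing:
  enabled: true
EOF
  echo "$out"
}
```

Usage: `helm install istio.io/istio --name istio --namespace istio-system --values "$(write_istio_values)"`. Keeping the settings in a file has a practical advantage: you can pass the same `--values` file to a later `helm upgrade` instead of repeating every flag.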

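(5) Change the ingress gateway's Service type

By default, the istio-ingressgateway Service is of type LoadBalancer. This cluster is self-hosted and has no cloud load balancer, so the Service would never receive an external IP. Change the type to NodePort instead. This step is not needed if you installed with the second command above, which already sets NodePort.

helm upgrade istio install/kubernetes/helm/istio --reuse-values --set gateways.istio-ingressgateway.type=NodePort    # --reuse-values keeps the values from the original install; otherwise Helm 2 rebuilds them from the chart defaults plus --set

Or patch the Service directly:

kubectl patch service istio-ingressgateway -n istio-system -p '{"spec":{"type":"NodePort"}}'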

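7. Test httpbin

(1) Install httpbin

Create the httpbin Pods and Services in the test namespace. The namespace must exist and have automatic sidecar injection enabled, because the httpbin Pod described below runs an istio-proxy sidecar. For example:

kubectl create namespace test
kubectl label namespace test istio-injection=enabled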

kubectl apply -f samples/httpbin/httpbin.yaml --namespace test

kubectl apply -f samples/httpbin/httpbin-gateway.yaml --namespace test

Copy the sample Gateway file and modify it as follows:

cd samples/httpbin
cp httpbin-gateway.yaml httpbin-gateway1.yaml

[root@centos75 httpbin]# cat httpbin-gateway1.yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: httpbin-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "httpbin.example.com"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: httpbin
spec:
  hosts:
  - "httpbin.example.com"
  gateways:
  - httpbin-gateway
  http:
  - match:
    - uri:
        prefix: /status
    - uri:
        prefix: /delay
    route:
    - destination:
        port:
          number: 8000
        host: httpbin
  - route:
    - destination:
        host: httpbin
        port:
          number: 8000
[root@centos75 httpbin]#

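Apply the modified file:

kubectl apply -f httpbin-gateway1.yaml --namespace test

Get the ingress gateway's address and port: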

export INGRESS_HOST=$(kubectl -n istio-system get po -l istio=ingressgateway -o go-template="{{range .items}}{{.status.hostIP}}{{end}}")

export INGRESS_PORT=$(kubectl -n istio-system get svc istio-ingressgateway -o go-template='{{range .spec.ports}}{{if eq .name "http2"}}{{.nodePort}}{{end}}{{end}}')

export GATEWAY_URL=$INGRESS_HOST:$INGRESS_PORT
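If the go-template matches no port named http2, INGRESS_PORT is silently empty, and curl then fails with a confusing error. The defensive sketch below validates the two parts before joining them. `gateway_url` is a hypothetical helper. The 30000-32767 bound is the default Kubernetes NodePort range, and clusters can be configured with a different one, so the sketch only warns when a port falls outside it.

```bash
#!/usr/bin/env bash
# Build host:port for the ingress gateway and reject obviously broken input.
# gateway_url is a hypothetical helper name.
gateway_url() {
  local host="$1" port="$2"
  if [ -z "$host" ]; then
    echo "empty ingress host" >&2
    return 1
  fi
  case "$port" in
    ''|*[!0-9]*)
      # Empty or non-numeric: the go-template most likely matched nothing.
      echo "ingress port '$port' is not a number" >&2
      return 1
      ;;
  esac
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "warning: port $port is outside the default NodePort range" >&2
  fi
  echo "$host:$port"
}
```

Usage: `export GATEWAY_URL=$(gateway_url "$INGRESS_HOST" "$INGRESS_PORT")`. The INGRESS_HOST template has its own pitfall: with more than one ingress gateway Pod, it concatenates all host IPs with no separator, and this check does not catch that.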

Check that the match route takes effect:

curl -I -HHost:httpbin.example.com http://$INGRESS_HOST:$INGRESS_PORT/status/200

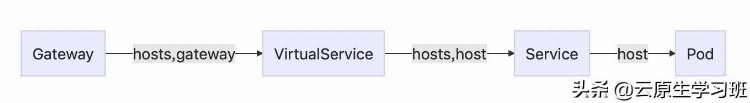
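The VirtualService above has two http entries. The sketch below is a simplified model of how one is selected, covering only the URI-prefix rules used here:

- Entries are evaluated in order, and the first match wins.
- The two uri conditions inside one match list are ORed.

`route_for_path` is a hypothetical helper that only mimics this behavior; Envoy performs the real matching.

```bash
#!/usr/bin/env bash
# Simplified model of route selection for the httpbin VirtualService:
# http entries are tried in order, and the first match wins.
# route_for_path is a hypothetical helper; it models only URI prefixes.
route_for_path() {
  local path="$1"
  case "$path" in
    /status*|/delay*)
      # First http entry: prefix /status OR prefix /delay.
      echo "match-route -> httpbin:8000"
      ;;
    *)
      # Second http entry has no match block, so it catches everything else.
      echo "default-route -> httpbin:8000"
      ;;
  esac
}
```

Both entries point at the same destination, so a request to /status/200 and one to /headers behave the same here. The ordering only becomes visible once the two routes point to different destinations.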

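(2) Trace the httpbin traffic path

The annotations (1) to (8) in the outputs below follow one request through four hops:

- The Gateway accepts host httpbin.example.com on port 80.
- The VirtualService bound to that Gateway, which declares the same host, sends /status and /delay to Service httpbin on port 8000.
- The Service forwards the traffic to port 80.
- Port 80 is the port declared by the httpbin container in the Pod.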

(a) kubectl describe gateway httpbin-gateway

[root@centos75 ~]# kt describe gw httpbin-gateway
Name:         httpbin-gateway
Namespace:    test
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"networking.istio.io/v1alpha3","kind":"Gateway","metadata":{"annotations":{},"name":"httpbin-gateway","namespace":"test"},"s...
API Version:  networking.istio.io/v1alpha3
Kind:         Gateway
Metadata:
  Creation Timestamp:  2019-09-13T17:04:46Z
  Generation:          1
  Resource Version:    2771114
  Self Link:           /apis/networking.istio.io/v1alpha3/namespaces/test/gateways/httpbin-gateway
  UID:                 af318455-9246-463e-9d97-736004458250
Spec:
  Selector:
    Istio:  ingressgateway
  Servers:
    Hosts:
      httpbin.example.com    --(1) must match the hosts in the VirtualService
    Port:
      Name:      http
      Number:    80
      Protocol:  HTTP
Events:  <none>

(b) kubectl describe virtualservice httpbin

[root@centos75 ~]# kt describe vs httpbin
Name:         httpbin
Namespace:    test
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"networking.istio.io/v1alpha3","kind":"VirtualService","metadata":{"annotations":{},"name":"httpbin","namespace":"test"},"sp...
API Version:  networking.istio.io/v1alpha3
Kind:         VirtualService
Metadata:
  Creation Timestamp:  2019-09-13T17:14:48Z
  Generation:          1
  Resource Version:    2772158
  Self Link:           /apis/networking.istio.io/v1alpha3/namespaces/test/virtualservices/httpbin
  UID:                 e72006ab-45ee-4e63-90a2-6fade656ea60
Spec:
  Gateways:
    httpbin-gateway    --(2) the Gateway to which this VirtualService's routing rules apply
  Hosts:
    httpbin.example.com    --(3) must match the hosts in the Gateway definition
  Http:
    Match:
      Uri:
        Prefix:  /status
      Uri:
        Prefix:  /delay
    Route:
      Destination:
        Host:  httpbin    --(4) name of the Service (or ServiceEntry) that receives the traffic
        Port:
          Number:  8000    --(5) port on which that Service or ServiceEntry receives the traffic
Events:  <none>
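Annotations (1) and (3) require the Gateway hosts and the VirtualService hosts to match. The simplified sketch below models that check for three cases: an exact name, `*`, or a leading `*.` wildcard. It ignores the other host forms Istio supports. `hosts_match` is a hypothetical helper and is not Istio's implementation.

```bash
#!/usr/bin/env bash
# Simplified model: does a VirtualService host fall under a Gateway host?
# Supports exact names, "*" and "*.suffix" wildcards only.
# hosts_match is a hypothetical helper name.
hosts_match() {
  local gw_host="$1" vs_host="$2"
  case "$gw_host" in
    '*')
      return 0 ;;
    '*.'*)
      # "*.example.com" accepts any host ending in ".example.com".
      case "$vs_host" in
        *"${gw_host#\*}") return 0 ;;
      esac
      ;;
    *)
      [ "$gw_host" = "$vs_host" ] && return 0 ;;
  esac
  return 1
}
```

With the hosts used here, `hosts_match httpbin.example.com httpbin.example.com` succeeds.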

(c) kubectl describe service httpbin

[root@centos75 ~]# kt describe svc httpbin
Name:              httpbin
Namespace:         test
Labels:            app=httpbin
Annotations:       kubectl.kubernetes.io/last-applied-configuration:
                     {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"app":"httpbin"},"name":"httpbin","namespace":"test"},"spec":{"...
Selector:          app=httpbin
Type:              ClusterIP
IP:                172.18.145.175
Port:              http  8000/TCP    --(6) port exposed by the backend Service
TargetPort:        80/TCP    --(7) port on the backend Pod that traffic is forwarded to
Endpoints:         192.168.148.94:80
Session Affinity:  None
Events:            <none>
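Annotations (5) to (7) form a port chain: the VirtualService targets Service port 8000, and the Service forwards to targetPort 80 on the Pod. The sketch below resolves that hop from Service port lines. `resolve_target_port` is hypothetical and expects `port targetPort name` lines. The port number comes first so that an unnamed port does not shift the fields.

```bash
#!/usr/bin/env bash
# Given "port targetPort [name]" lines for a Service on stdin, print the
# targetPort that a request to Service port $1 is forwarded to.
# resolve_target_port is a hypothetical helper name.
resolve_target_port() {
  local want="$1" port target _name
  while read -r port target _name; do
    if [ "$port" = "$want" ]; then
      echo "$target"
      return 0
    fi
  done
  echo "service port $want not found" >&2
  return 1
}
```

Usage, using a kubectl JSONPath range to produce the input lines:

`kubectl -n test get svc httpbin -o jsonpath='{range .spec.ports[*]}{.port} {.targetPort} {.name}{"\n"}{end}' | resolve_target_port 8000`

For the Service above, this should print 80.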

(d) kubectl describe pod httpbin-7d9d5b55b9-52mxb

[root@centos75 ~]# kt describe po httpbin-7d9d5b55b9-52mxb
Name:           httpbin-7d9d5b55b9-52mxb
Namespace:      test
Priority:       0
Node:           centos75/10.0.135.30
Start Time:     Sat, 14 Sep 2019 00:26:47 +0800
Labels:         app=httpbin
                pod-template-hash=7d9d5b55b9
                version=v1
Annotations:    cni.projectcalico.org/podIP: 192.168.148.94/32
                sidecar.istio.io/status:
                  {"version":"610f2b5742375d30d7f484e296fd022086a4c611b5a6b136bcf0758767fefecc","initContainers":["istio-init"],"containers":["istio-proxy"]...
Status:         Running
IP:             192.168.148.94
Controlled By:  ReplicaSet/httpbin-7d9d5b55b9
Init Containers:
  istio-init:
    Container ID:  docker://42c5b3d84755502fe5048477585096ea35a9b33ee88bacde3c5b2241bd9935c9
    Image:         docker.io/istio/proxy_init:1.3.0
    Image ID:      docker-pullable://istio/proxy_init@sha256:aede2a1e5e810e5c0515261320d007ad192a90a6982cf6be8442cf1671475b8a
    Port:          <none>
    Host Port:     <none>
    Args:
      -p
      15001
      -z
      15006
      -u
      1337
      -m
      REDIRECT
      -i
      *
      -x
      -b
      *
      -d
      15020
    State:          Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Sat, 14 Sep 2019 00:26:48 +0800
      Finished:     Sat, 14 Sep 2019 00:26:49 +0800
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     100m
      memory:  50Mi
    Requests:
      cpu:        10m
      memory:     10Mi
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-r7slk (ro)
Containers:
  httpbin:
    Container ID:   docker://50d746242eb3b1b87c1e40a3059890b6a3c2482334975452e50047010c2cb2c9
    Image:          docker.io/kennethreitz/httpbin
    Image ID:       docker-pullable://kennethreitz/httpbin@sha256:599fe5e5073102dbb0ee3dbb65f049dab44fa9fc251f6835c9990f8fb196a72b
    Port:           80/TCP    --(8) container port defined in the Pod spec
    Host Port:      0/TCP
    State:          Running
      Started:      Sat, 14 Sep 2019 00:35:28 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-r7slk (ro)
  istio-proxy:
    Container ID:  docker://84c019f39b6215950acb79e86593cb683d9344929ceabf7d07014586af01f782
    Image:         docker.io/istio/proxyv2:1.3.0
    Image ID:      docker-pullable://istio/proxyv2@sha256:f3f68f9984dc2deb748426788ace84b777589a40025085956eb880c9c3c1c056
    Port:          15090/TCP
    Host Port:     0/TCP
    Args:
      proxy
      sidecar
      --domain
      $(POD_NAMESPACE).svc.cluster.local
      --configPath
      /etc/istio/proxy
      --binaryPath
      /usr/local/bin/envoy
      --serviceCluster
      httpbin.$(POD_NAMESPACE)
      --drainDuration
      45s
      --parentShutdownDuration
      1m0s
      --discoveryAddress
      istio-pilot.istio-system:15010
      --zipkinAddress
      zipkin.istio-system:9411
      --dnsRefreshRate
      300s
      --connectTimeout
      10s
      --proxyAdminPort
      15000
      --concurrency
      2
      --controlPlaneAuthPolicy
      NONE
      --statusPort
      15020
      --applicationPorts
      80
    State:          Running
      Started:      Sat, 14 Sep 2019 00:35:29 +0800
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     2
      memory:  1Gi
    Requests:
      cpu:      100m
      memory:   128Mi
    Readiness:  http-get http://:15020/healthz/ready delay=1s timeout=1s period=2s #success=1 #failure=30
    Environment:
      POD_NAME:                          httpbin-7d9d5b55b9-52mxb (v1:metadata.name)
      ISTIO_META_POD_PORTS:              [
                                             {"containerPort":80,"protocol":"TCP"}
                                         ]
      ISTIO_META_CLUSTER_ID:             Kubernetes
      POD_NAMESPACE:                     test (v1:metadata.namespace)
      INSTANCE_IP:                        (v1:status.podIP)
      SERVICE_ACCOUNT:                    (v1:spec.serviceAccountName)
      ISTIO_META_POD_NAME:               httpbin-7d9d5b55b9-52mxb (v1:metadata.name)
      ISTIO_META_CONFIG_NAMESPACE:       test (v1:metadata.namespace)
      SDS_ENABLED:                       false
      ISTIO_META_INTERCEPTION_MODE:      REDIRECT
      ISTIO_META_INCLUDE_INBOUND_PORTS:  80
      ISTIO_METAJSON_LABELS:             {"app":"httpbin","pod-template-hash":"7d9d5b55b9","version":"v1"}

IST